Role of ML and its Potential for Architectural Design

This will probably be one of many posts as this technology advances and more use cases become easy to show off.

Many people are completely unaware of the revolution happening in machine learning and how it is going to affect every creative industry over the next couple of years. This is not limited to strictly creative professions such as movie makers, artists, and novelty (and absurd) NFT makers. ML tools can now take a text prompt and output an image, animation, 3D model, or audio, or edit any of those into any of the others. This will reshape how engineers communicate with clients, with contractors out in the field, and with the public about how the buildings we design will look and function.

Communication in Architectural Design and Engineering

When a building is in its infancy, an architecture firm is contracted to work with the client (or clients) and stakeholders to design something they will appreciate and find useful. From there, the architecture firm works with engineering companies and consultants to flesh out the architect’s plan and collaborate until everyone involved believes it will be suitable. Contractors are hired, construction begins, problems arise, and then come phone calls, meetings, site visits, emails, more phone calls, more meetings, and so on until the building is finished. Throughout every step of the process, from inception to completion, one thing rings out: communication is key. Few projects go by where everyone is satisfied with the level of communication.

ML and its tools for content creation and modification have great potential to speed up communication and offer visual clarity on problems that everyone involved could face. Let’s start with the clients who want a building but are not sure what they want, which, let’s face it, is most clients. Arming them with tools that turn their words into images they can latch onto would be very helpful for the architect. Below I will show off images generated by a computer from a prompt. The prompt is the only thing I gave the computer, and the image is what the computer gave back to me. These are the first 9 images I got when I typed each prompt; I did not filter out any “bad” results. Each image took around 15 seconds to generate and is 512x512 pixels.

Image from Stable Diffusion with the prompt 'lobby atrium with glass and a front desk, lots of light, modern aesthetic, sunlight'

'front of glass and wood building at street level, san francisco city street, blue and white polkadot colored, realistic, front entrance'

'moody wood panel sitting area, shelves of books, comfortable seats'

'new york city office, open floor plan, garden with flowers, glass partitions, archviz, archdaily, dreamy'
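For readers who want to try this themselves, here is a minimal sketch of generating the first nine 512x512 images for one prompt. It assumes the Hugging Face `diffusers` and `torch` packages, a CUDA GPU, and the `CompVis/stable-diffusion-v1-4` checkpoint. None of these are confirmed as the setup used for the images above, and the seed numbering, function names, and output file names are made up for illustration.

```python
from pathlib import Path


def batch_seeds(base_seed: int, count: int = 9) -> list[int]:
    """Consecutive seeds, one per image, so a batch can be reproduced later."""
    return [base_seed + i for i in range(count)]


def generate_batch(prompt: str, out_dir: str = "out", base_seed: int = 0,
                   count: int = 9) -> list[Path]:
    """Generate `count` unfiltered 512x512 images for `prompt` and save them."""
    # Imported here so the helper above works without the heavy dependencies.
    # Assumption: diffusers and torch are installed and a CUDA GPU is available.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(
        "CompVis/stable-diffusion-v1-4",  # assumed checkpoint
        torch_dtype=torch.float16,
    ).to("cuda")

    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)
    paths = []
    for seed in batch_seeds(base_seed, count):
        # A fixed seed per image makes each result repeatable.
        generator = torch.Generator(device="cuda").manual_seed(seed)
        image = pipe(prompt, height=512, width=512, generator=generator).images[0]
        path = folder / f"seed_{seed}.png"
        image.save(path)
        paths.append(path)
    return paths
```

Calling `generate_batch("moody wood panel sitting area, shelves of books, comfortable seats")` would write nine PNG files, keeping every result rather than only the good ones.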

The longest part of making all these images was not typing in a prompt, or even letting the computer run to generate them; it was saving them all and putting them into a collage. It truly is that easy to create whatever you want as reference material. The same goes for architects pitching ideas to clients. Architects can now, with the help of a computer, pitch ideas to a client and get feedback more quickly. No longer do architects need to waste time rendering images that will only get rejected. Clients and architects can easily and freely use these tools to help each other in the design process. Sure, you could go to Google Images to find references, but sometimes the thing you want doesn’t exist. Say, a hotel on Mars in a lake, and since it’s cold on Mars, let’s also make it snowy.

'bright hotel in the middle of a lake on mars, snowing, cold, archviz'

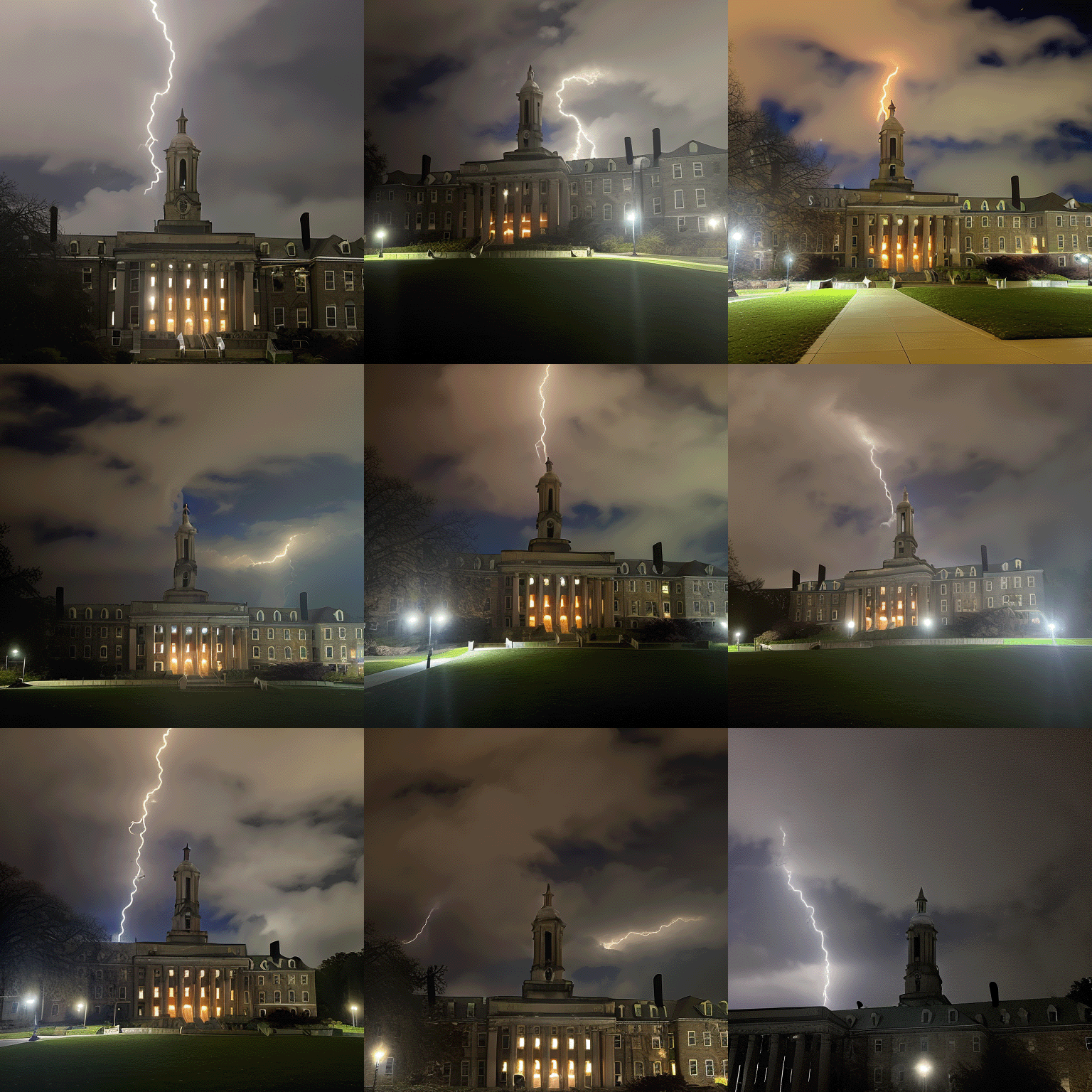
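The collage step that took the longest can also be automated. The sketch below computes where each tile goes in a 3x3 grid and, assuming the Pillow library is installed, pastes the saved images onto one sheet. The function names and the choice of Pillow are illustrative, not the method used for the collages in this post.

```python
def tile_origins(count: int = 9, columns: int = 3,
                 tile: int = 512) -> list[tuple[int, int]]:
    """Top-left (x, y) pixel position of each tile, filled row by row."""
    return [((i % columns) * tile, (i // columns) * tile) for i in range(count)]


def sheet_size(count: int = 9, columns: int = 3, tile: int = 512) -> tuple[int, int]:
    """Width and height of the finished collage in pixels."""
    rows = -(-count // columns)  # ceiling division
    return columns * tile, rows * tile


def make_collage(image_paths: list[str], out_path: str, columns: int = 3,
                 tile: int = 512) -> None:
    """Paste the images onto one sheet. Assumes Pillow is installed."""
    from PIL import Image  # third-party; imported lazily

    count = len(image_paths)
    sheet = Image.new("RGB", sheet_size(count, columns, tile), "white")
    for path, origin in zip(image_paths, tile_origins(count, columns, tile)):
        with Image.open(path) as img:
            # Resize defensively in case an image is not exactly tile x tile.
            sheet.paste(img.convert("RGB").resize((tile, tile)), origin)
    sheet.save(out_path)
```

With nine 512x512 images the finished sheet is 1536x1536 pixels.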

There is one inherent downside to this: anything specific to a particular building or current project will not show up if I type it in a prompt. If I ask for “Old Main at night” I’m not going to get anything useful because the trained model doesn’t know what Old Main at Penn State looks like. It just wasn’t in the data set to a meaningful degree. But if you provide a handful of images of Old Main and spend some time retraining the model, it will then be able to recognize the words “Old Main” in the prompt and give you what you want. And I did exactly that. I went to the lawn in front of Old Main and took photos across multiple days, from multiple angles, and at different times of day. Then I retrained the model using a Google Colab notebook. Here are some examples of what I was able to accomplish after training it for 4,000 steps, which took about an hour and a half.

These are the 22 photos I used to retrain the model, so when I ask for Old Main it will give something similar to these. Yes, I realize one of the photos is rotated; the model was trained with that photo rotated and it didn’t seem to affect the results.

The model treats the phrase “old-main” as the keyword for making images similar to the images titled old-main in the training set. I could have chosen anything, but “old-main” is convenient. Below are some images I generated with some given prompts. The first few are creative and fun; in the later ones I try to push the model to give me more abstract and useful images, but it struggles with visual clarity and coherence as I get further and further away from the training set.

To start, I’ll only ask it to generate images of “old-main” with variable strength. Strength (the scale setting) determines how much the output conforms to the prompt: a lower value allows the model to be “creative,” whereas a larger value tries to follow the prompt more strictly. The seed is kept the same across each row to help emphasize this. You can see that a scale between 6 and 10 is likely my sweet spot for realistic outputs. A scale that is too high tries too hard to fit the prompt and has unexpected results. A scale that is too low gets the basic idea of what Old Main is but doesn’t try very hard to fill in all the details. These were all done at 20 samples.


Demonstration of how strength changes the output while using the same "starting point" seed across each row
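To make the strength setting a little less mysterious: assuming it corresponds to what Stable Diffusion calls the classifier-free guidance scale (this post does not confirm that), each sampling step blends a prediction made without the prompt and one made with it. The sketch below applies that blend to toy numbers and lays out the kind of sweep shown above, with one seed per row and one scale per column. The seeds, scale values, and toy predictions are made up for illustration.

```python
def guided(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance blend: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def sweep(seeds: list[int], scales: list[float]) -> list[list[tuple[int, float]]]:
    """One row per seed, one column per scale, so each row shares a starting point."""
    return [[(seed, scale) for scale in scales] for seed in seeds]


# Toy stand-ins for the model's two predictions at a single step.
without_prompt = [0.0, 1.0]
with_prompt = [1.0, 3.0]

print(guided(without_prompt, with_prompt, 0.0))  # [0.0, 1.0]  prompt ignored
print(guided(without_prompt, with_prompt, 1.0))  # [1.0, 3.0]  prompt taken as-is
print(guided(without_prompt, with_prompt, 8.0))  # [8.0, 17.0] pushed well past it

# Hypothetical sweep: three seeds (rows) by four scales (columns).
grid = sweep(seeds=[11, 22, 33], scales=[1.5, 6.0, 10.0, 20.0])
```

Scales above 1 exaggerate whatever difference the prompt makes, which is one way to picture why very high values overshoot and very low values leave details vague.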

'old-main struck by lightning, lightning bolt, dark sky, bright lightning bolt'

So what if I ask for a drone shot? One problem: if the model didn’t know what Old Main looked like before, how could it possibly know what surrounds Old Main? The answer is that it does not, so it makes it up. The results are pretty interesting, with the one in the middle probably being the closest to being right. What is most impressive is that the right wing of the building is mostly occluded by a willow tree in all of my training photos, yet the model still assumes (correctly) that Old Main is a symmetrical building.

'looking down on old-main from a drone'

How about winter photography? My training set doesn’t include any snow, so the model has no idea what Old Main looks like under snow, yet it still figures it out fairly well. I included “black and white photography” to also get a sense of how it handles style; it does its best but still includes some color in the photos. For the most part these photos are pretty impressive, even if the model struggles with how many pillars Old Main actually has (the correct answer is eight).

'old-main in a blizzard, historic blizzard photograph, black and white photography'

But if, for instance, my prompt is “old-main as a gothic cathedral”, the only things that seem to change are that the focus goes to the clock tower and some of the images have a cross on top. Interesting result. Another thing to note about all these 3x3 grids: these are the first 9 images it generates; I’m not handpicking them. It also takes only around 1 minute to get these 9 images, so it wouldn’t be much of a problem to just run it again with a tweaked prompt for better results.

'old-main as a gothic cathedral'

For this prompt I had to turn the strength down to 1.5. As my prompts get more abstract I have to lower the strength and allow more degrees of freedom; otherwise I just get a regular old image of Old Main and nothing similar to my prompt. This is what I get when I ask for “old-main covered in moss vines and flowers, green architecture, solarpunk, archdaily”. There is noticeably more greenery, but most of the images are not quite usable for anything.

'old-main covered in moss vines and flowers, green architecture, solarpunk, archdaily'

The inherent problem with prompts that “ask too much” comes from a couple of things. First, I used a faster method to train the model. It cuts the floating point precision of the training in half, which was necessary to bring the GPU VRAM requirement below Google Colab’s maximum capability. Otherwise this would no longer be free and would require paying for a subscription. Second, a more rigorous method would include hand labeling the images. Essentially, I trained the computer to know what photos of Old Main look like, not what is in the photos of Old Main. Yes, the model has a sense of what a clock tower is and what a portico is, but not the essence of what they are. It is as if I gave a bunch of photos of New York City to an alien who has never been to Earth. Sure, they may become great at reproducing those images and creating similar variations, but if you ask them what is in those photos, or to change them to look more like Chicago, they’ll have no idea what you are talking about. This is what the model is doing. Taking more close-ups and labeling those photos differently, like “portico of Old Main” or “close-up of clock tower on Old Main”, would allow for more defined prompts. Letting the model train for much longer than 4,000 steps would also improve results. Upwards of 20,000 would be closer to ideal but takes proportionally longer. These are all problems that can be addressed, but they will continue to be barriers to entry for many people in architectural design and construction. However, this will change.
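A rough back-of-the-envelope sketch of the two trade-offs above: half precision stores each number in 2 bytes instead of 4, and training time is assumed to scale linearly with step count, starting from the 4,000 steps in about 1.5 hours reported earlier. The parameter count is a hypothetical round number, not the real size of the model, and real VRAM use also includes gradients, optimizer state, and activations, which this ignores.

```python
import struct

BYTES_FP32 = struct.calcsize("<f")  # 4 bytes per single-precision value
BYTES_FP16 = struct.calcsize("<e")  # 2 bytes per half-precision value


def weight_gigabytes(num_params: int, bytes_per_value: int) -> float:
    """Memory needed just to store the weights, in GB (10**9 bytes)."""
    return num_params * bytes_per_value / 1e9


def estimated_hours(steps: int, known_steps: int = 4_000,
                    known_hours: float = 1.5) -> float:
    """Linear extrapolation from one measured run (an assumption)."""
    return steps * known_hours / known_steps


def round_trip_fp16(value: float) -> float:
    """What a number becomes after being stored in half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


HYPOTHETICAL_PARAMS = 1_000_000_000  # illustrative only, not the real model size

print(weight_gigabytes(HYPOTHETICAL_PARAMS, BYTES_FP32))  # 4.0
print(weight_gigabytes(HYPOTHETICAL_PARAMS, BYTES_FP16))  # 2.0
print(estimated_hours(20_000))                            # 7.5
print(round_trip_fp16(0.1))  # close to, but not exactly, 0.1
```

Under the linear assumption, 20,000 steps would take roughly 7.5 hours, and the small rounding error in the last line hints at what is given up in exchange for the memory savings.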

Last year this level of fidelity wasn’t possible. Eight months ago it became possible but cost money. Two months ago it became open source and accessible. And in the past month or so the methods have become easy to use. I’m no programmer; I am a somewhat busy student and did this in my free time. Give actual programmers more time and money and I promise this will become 10x better in the coming years. I have not even discussed all the other uses this has. Look it up: just search for Stable Diffusion, DALL-E, or generative images, and if you can’t think of a way this will be useful for architectural design or construction, you are not thinking hard enough. I promise you that.